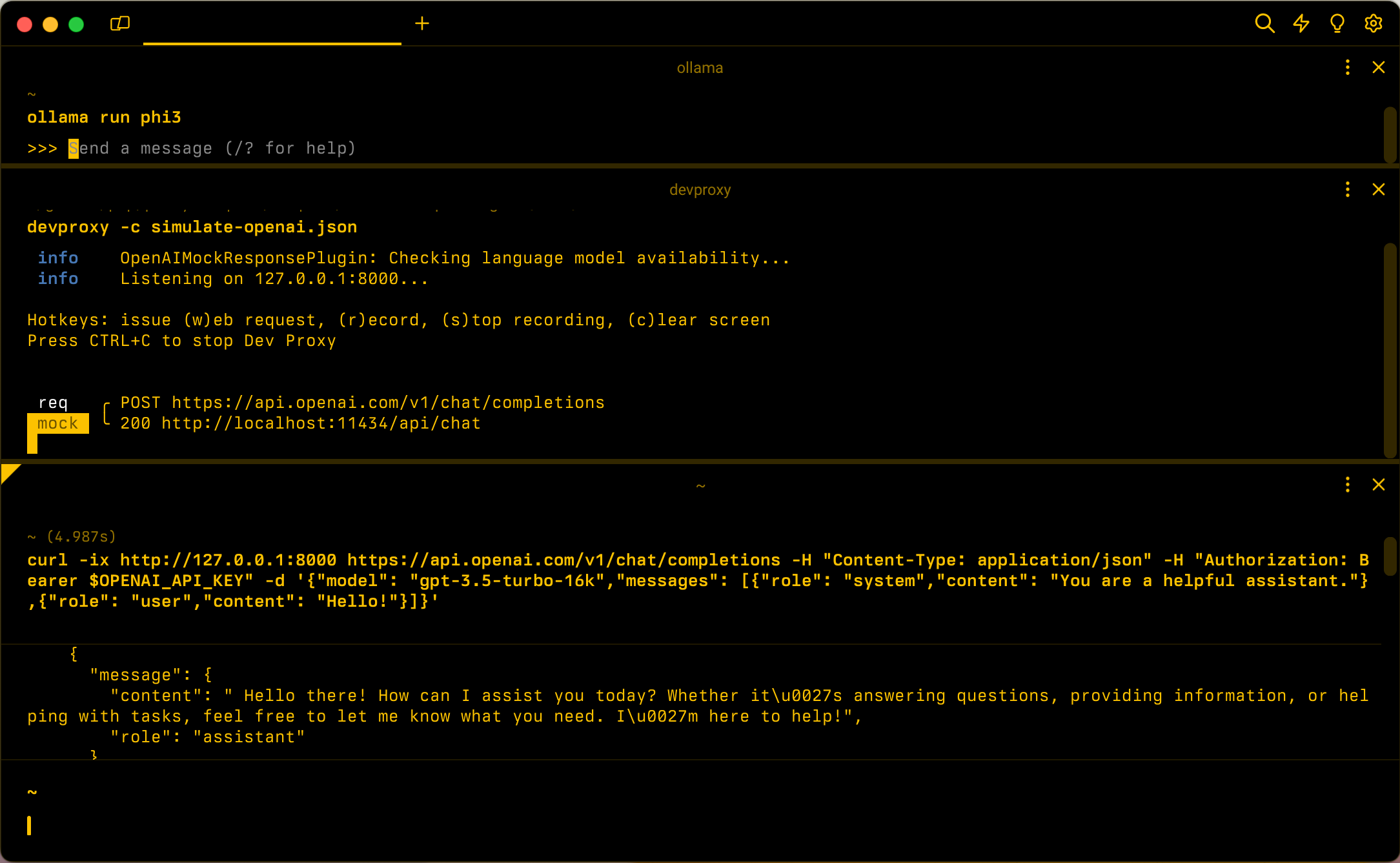

When you build apps connected to OpenAI, often only a portion of the app interacts with the OpenAI API. While you work on the parts of the app that don't need real replies from the OpenAI API, you can simulate the responses using Dev Proxy, which avoids incurring unnecessary costs. The OpenAIMockResponsePlugin uses a local language model to simulate responses from the OpenAI API.

Before you start

To simulate OpenAI API responses using Dev Proxy, you need a supported language model client installed on your machine.

By default, Dev Proxy uses the llama3.2 language model running on Ollama. To use a different client or model, update the language model settings in the Dev Proxy configuration file.

Configure Dev Proxy to simulate OpenAI API responses

Tip

The steps described in this tutorial are available in a ready-to-use Dev Proxy preset. To use the preset, on the command line, run devproxy preset get simulate-openai and follow the instructions.

To simulate OpenAI API responses using Dev Proxy, you need to enable the OpenAIMockResponsePlugin in the devproxyrc.json file.

{
  "$schema": "https://raw.githubusercontent.com/dotnet/dev-proxy/main/schemas/v0.27.0/rc.schema.json",
  "plugins": [
    {
      "name": "OpenAIMockResponsePlugin",
      "enabled": true,
      "pluginPath": "~appFolder/plugins/dev-proxy-plugins.dll"
    }
  ]
}

Next, configure Dev Proxy to intercept requests to OpenAI API. OpenAI recommends using the https://api.openai.com/v1/chat/completions endpoint, which allows you to benefit from the latest models and features.

{
  // [...] trimmed for brevity
  "urlsToWatch": [
    "https://api.openai.com/v1/chat/completions"
  ]
}

Finally, configure Dev Proxy to use a local language model.

{
  // [...] trimmed for brevity
  "languageModel": {
    "enabled": true
  }
}

The complete configuration file looks like this.

{
  "$schema": "https://raw.githubusercontent.com/dotnet/dev-proxy/main/schemas/v0.27.0/rc.schema.json",
  "plugins": [
    {
      "name": "OpenAIMockResponsePlugin",
      "enabled": true,
      "pluginPath": "~appFolder/plugins/dev-proxy-plugins.dll"
    }
  ],
  "urlsToWatch": [
    "https://api.openai.com/v1/chat/completions"
  ],
  "languageModel": {
    "enabled": true
  }
}
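
Before starting Dev Proxy, it can help to confirm that a configuration file contains all three settings from the steps above. The following Python sketch is not part of Dev Proxy: the helper names find_config_problems and check_file and the messages they return are hypothetical, and the sketch assumes the file is strict JSON without // comments.

```python
import json


# Hypothetical helper, not part of Dev Proxy: reports which of the three
# settings from this tutorial are missing from a parsed configuration.
def find_config_problems(config: dict) -> list[str]:
    problems = []

    # Step 1: the OpenAIMockResponsePlugin entry must exist and be enabled.
    plugin_enabled = any(
        plugin.get("name") == "OpenAIMockResponsePlugin"
        and plugin.get("enabled") is True
        for plugin in config.get("plugins", [])
    )
    if not plugin_enabled:
        problems.append("OpenAIMockResponsePlugin is not enabled")

    # Step 2: at least one URL must be watched.
    if not config.get("urlsToWatch"):
        problems.append("urlsToWatch is empty")

    # Step 3: the local language model must be enabled.
    if config.get("languageModel", {}).get("enabled") is not True:
        problems.append("languageModel is not enabled")

    return problems


def check_file(path: str = "devproxyrc.json") -> list[str]:
    # Assumes the file is strict JSON (no // comments).
    with open(path, encoding="utf-8") as config_file:
        return find_config_problems(json.load(config_file))
```

Calling check_file() in the folder that holds devproxyrc.json returns an empty list when all three settings are present.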

Simulate OpenAI API responses

Assuming the default configuration, start Ollama with the llama3.2 language model. On the command line, run ollama run llama3.2.
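
If you want to confirm from a script that Ollama has the model available, a minimal Python sketch could look like the following. It assumes Ollama listens on its default local address, http://localhost:11434, and that its /api/tags endpoint lists the locally available models. The helper names model_is_listed and fetch_tags are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def model_is_listed(tags: dict, model: str) -> bool:
    """Return True if `model` appears in an Ollama /api/tags payload."""
    for entry in tags.get("models", []):
        name = entry.get("name", "")
        # Ollama reports names with a tag suffix, for example "llama3.2:latest",
        # so compare both the full name and the part before the colon.
        if name == model or name.split(":", 1)[0] == model:
            return True
    return False


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    # Requires a running Ollama instance; raises URLError otherwise.
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as response:
        return json.load(response)


# Example (needs Ollama running):
#   model_is_listed(fetch_tags(), "llama3.2")
```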

Next, start Dev Proxy. If you use the preset, run devproxy -c "~appFolder/presets/simulate-openai/simulate-openai.json". If you use a custom configuration file named devproxyrc.json stored in the current working directory, run devproxy. Dev Proxy checks that it can access the language model on Ollama and confirms that it's ready to simulate OpenAI API responses.

info OpenAIMockResponsePlugin: Checking language model availability...
info Listening on 127.0.0.1:8000...
Hotkeys: issue (w)eb request, (r)ecord, (s)top recording, (c)lear screen
Press CTRL+C to stop Dev Proxy

Run your application and make requests to the OpenAI API. Dev Proxy intercepts the requests and simulates responses using the local language model.
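
As an illustration of such a request, the following Python sketch sends a chat completion request through Dev Proxy using only the standard library. Treat it as a sketch under assumptions rather than a reference client: the model name and API key are placeholders, the helper names are hypothetical, and it assumes the Dev Proxy root certificate is trusted by your Python installation so that the HTTPS request can be intercepted.

```python
import json
import urllib.request

PROXY_URL = "http://127.0.0.1:8000"  # address Dev Proxy reports it listens on
CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    # The model name and API key below are placeholders for illustration.
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer placeholder-key",
        },
        method="POST",
    )


def send_via_proxy(request: urllib.request.Request, proxy_url: str = PROXY_URL) -> dict:
    # Route both schemes through Dev Proxy. Assumes the Dev Proxy root
    # certificate is trusted by this Python installation.
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    with opener.open(request, timeout=60) as response:
        return json.load(response)


def extract_reply(completion: dict) -> str:
    # Chat completion responses carry the text in choices[0].message.content.
    return completion["choices"][0]["message"]["content"]


# Example (needs Dev Proxy and the local language model running):
#   print(extract_reply(send_via_proxy(build_chat_request("Hello"))))
```

Because the request targets the watched URL, the application code stays the same whether the reply comes from Dev Proxy or from the real API; only the proxy setting changes.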

Next step

Learn more about the OpenAIMockResponsePlugin.

Samples

See also the related Dev Proxy samples: